Title: I Am Just A Human

Thesis:

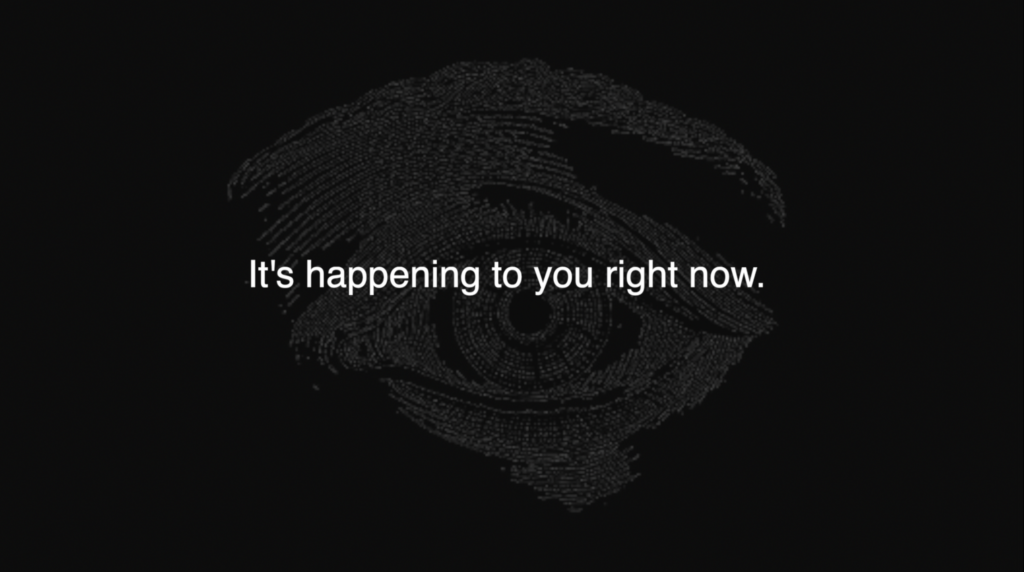

How accurately does your digital data reflect what you are doing in everyday life?

How safe are you when you allow your digital data to be viewed by the public?

My plan is to track one person and try to determine their common activities and interests. It will be a commentary on the algorithmic systems of surveillance capitalism.

I’ll then make a video of the person I “stalked,” based on the guesses I draw from their digital data.

James Bridle – Something is wrong on the internet

This topic is particularly frustrating for me because I have a five-year-old little brother who is at risk of being exposed to content that could damage his psyche, simply because people who will do anything for views exploit YouTube’s algorithms. A system meant to surface more convenient and appropriate content has been exploited to the max, and I think it is, in a way, a representation of the horrors of humanity (much like how the black market and the dark web exist to satisfy messed-up minds). I think it is very important to filter the content our kids watch, because if we let the algorithms decide what they watch next, we might accidentally cause irreversible psychological damage.

Do you think there should be human regulators overseeing this issue? Why has this content gone unnoticed for so long, and what can we do to stop it from spreading any further?

Rachel Metz – There’s a new obstacle to landing a job after college: Getting approved by AI

In the pursuit of convenience, we can see the relationship between humans and technology through our dependence on and trust in artificial intelligence. HireVue is a gatekeeping AI for entry-level jobs, created to help companies determine whether an applicant is a good fit. On the surface, I can understand how this sort of system could be helpful, especially for large companies with a myriad of applicants to go through. However, it also raises the possibility of errors, and at the end of the day it is just a machine built to find what it was told to find. It has no capacity for “empathy” and cannot understand the human connection that is present in in-person interviews. I think it would be reasonable to have an algorithm or AI check whether an applicant meets the requirements in terms of qualifications and experience, but NOT judge them on how they talk in a video.

Should a system like this persist, or should we keep in-person interactions as a deciding factor for employment?

Jia Tolentino – How TikTok Holds Our Attention

TikTok has become such a prominent and influential part of contemporary culture that it is no wonder people want to know how it works. But even those who are closely involved with it don’t quite understand how it works. It keeps users hooked by filtering content and predicting what you will be interested in based on how you interact with it. Of course, there is also the question of what content the app is hiding from you. I find it fascinating that it has become so big and influential, yet no one fully knows how it works, and that in itself could create social issues we may not recognize until much later.

Eric Meyer – Inadvertent Algorithmic Cruelty

This is a very personal account of how an algorithm created to “bring joy to its users” deepened someone’s heartbreak. At their core, algorithms are just machines created for convenience; they were never intended to have the heart of a human. In some sense, I can’t quite blame algorithms for what they do. However, if blame is to be placed, it should fall on the humans who created them while disregarding the emotional impact they can have on people’s lives. This is definitely something that should be monitored more closely, or at the very least, people should be allowed to opt out of features that might remind them of pain.

Rachel Thomas – The problem with metrics is a big problem for AI

Metrics are meant to be a useful way to gauge users’ interaction with and interest in the content they consume; however, they are not always accurate. They can reveal valuable information that helps recommend things the numbers say we enjoy, but there is a lot more to take into account than the numbers alone. I think about YouTube’s trending page and how it doesn’t actually reflect what users watch (it rarely shows content from deserving YouTubers whose numbers are genuinely climbing). Instead, it tends to feature content that is likely getting much of its viewership from bots, like TroomTroom, a clickbait life-hack channel that I personally think shits out content for views that they buy, content that is subpar compared to the myriad of great creators out there. They appear on the trending page A LOT, and I find it very frustrating.

Frank Pasquale – Black Box Society

When it comes to transparency about how our data is shared, one question keeps coming up: “Should they tell us?” With the rise of data sharing among big corporations and the government, we have created a world organized by people we can’t see but who can see us. The book also identifies three key strategies for “keeping the black boxes closed”: real secrecy, legal secrecy, and obfuscation.

Building on that question, how much should we know about how our information is being used? How would knowing affect our lives? Would it be easier to stay blind to it?

Cathy O’Neil – The Era of Blind Faith in Big Data Must End

Building algorithms on the premise that they are an objective tool for determining success is a faulty approach. Algorithms are essentially opinions embedded in code; they reflect our past patterns and practices rather than being the objective tools we intended them to be. As O’Neil puts it, they automate the status quo. To counter their silent and possibly detrimental effects, we have to check them for fairness. O’Neil lists the steps as checking data integrity, auditing the definition of success, considering accuracy, and accounting for the long-term effects of algorithms.

Do you think algorithms reflect human biases? In what other ways could algorithms potentially harm the lives of others?

Virginia Eubanks – Automating Inequality

Eubanks discusses the history and rise of the digital poorhouse and how it responds to and recreates a narrative of austerity: the idea that there is not enough for everyone, which raises the question of who deserves their human rights. She looks into social services and how algorithms meant to help the system identify people in need have actually made the problem worse, because they inherit the history of how America treats the poor. She gives an example in which the system confuses parenting while poor with poor parenting, exposing it as a poverty-profiling system that creates a feedback loop of injustice.

She offers possible solutions to the issue. Do you think that in the near future we can work together to overcome it and build a more just future for the impoverished? Or do you think this unfair system will continue to thrive, to the benefit of the privileged and the detriment of the poor?

Janet Vertesi – My Experiment Opting Out of Big Data

I remember reading this story before. To avoid being bombarded with pregnancy-related ads and suggestions from social media platforms, Vertesi did her very best to live an essentially analog life. I commend her for how far she went to slip under the radar; however, it came at the cost of tense relationships and the risk of looking like a criminal because of the practices she used.

Would you ever consider doing something like this to stay under the radar of social media data collectors?

Walliams and Lucas – The Computer Says No

I think this is a perfect example of our reliance on technology. It is a simple yet effective depiction of how we have become so trusting of technology that we cannot see how it might make an error, when in fact it can be just as flawed as humans are. There really isn’t a truly objective form of technology, since everything has been created to cater to our needs and, in some way, shape, or form, carries our tendency to make mistakes as well. You can make a nearly perfect program, but the creator’s biases can still be present, and we shouldn’t blindly believe everything the computer tells us just because we assume it is more reliable than humans.

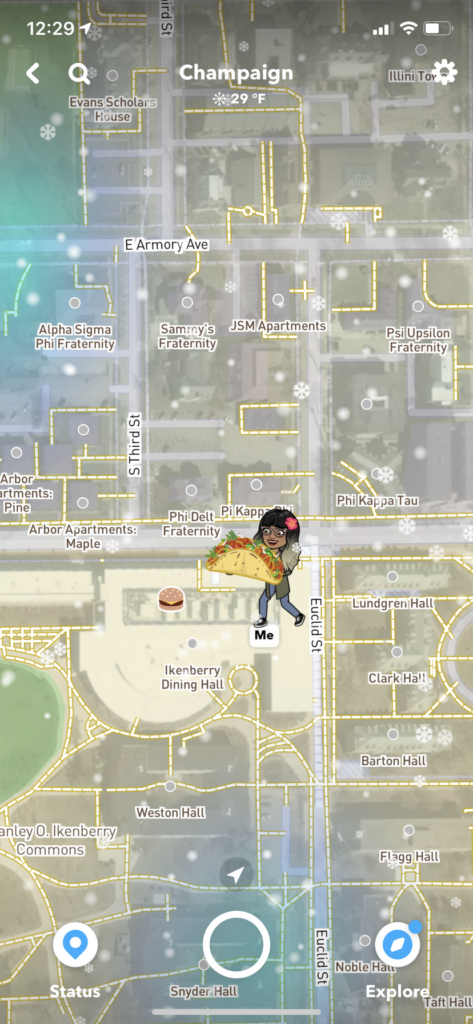

I want to focus on how our current location is monitored and how technology tries to determine what you are doing there. My main example is how your avatar, or “Bitmoji,” on the Snapchat map changes according to what the app assumes you are doing at that location. For example, when you are in a dining hall, it says you are eating. When you are listening to music, the avatar has headphones on.

I personally find it interesting yet disturbing that this is even a feature. I remember looking at the map and seeing my friends’ Bitmojis sleeping. How far can this go before it goes too far? The platform does not have to be Snapchat; however, Snapchat offers one of the best visual representations of my idea: playing with what my phone thinks I’m doing at a location versus what I am actually doing in real life.

Safiya Noble – Algorithms of Oppression

In this article, Noble exposes an underlying issue with Google’s search algorithm: it returns sexist and racist results when certain keywords are searched. A prime example is the derogatory results that appear in searches for women of color (most of them sexualizing these women or pornographically fetishizing the group as a whole). She also points to the difference in results between searches for “three white teenagers” and “three black teenagers,” and how Google’s response to that backlash (and many others) has been discreet and somewhat unapologetic.

How can we, as users of this search engine, combat this type of oppression? How can we bring awareness of it to the masses?

Ruha Benjamin – Race After Technology

Benjamin presents the ever-present issue of the technology we have created being racist despite its reputation for objectivity. This is because the creators’ biases translate into their creations. She introduces the concept of the New Jim Code (a play on the Jim Crow laws), in which racist methods of oppression are embedded in the code that many of us end up using, and she presents multiple examples of technologies that do this. When oppression thrives, resistance from the oppressed rises with it, so she also discusses the racial justice groups that have emerged to combat this phenomenon.

How can future technology be shielded from the biases of its creators so that it is more objective?

Lisa Nakamura – Laboring Infrastructures

VR is not necessarily new to the industry; however, relative to other existing technologies, it is still in its early stages of development, meaning no one really knows what power it truly holds or how it will affect our future. As a result, many experiments have tested its ability to create virtual spaces that distort the user’s perception of reality, and with that comes artificially constructed empathy. However, there is an underlying issue in constructing virtual realities that give users experiences they would not otherwise have: many creators have taken it upon themselves to simulate the oppression of marginalized people without really considering the consequences.

What can be done to prevent these constructions of reality from going “too far”?

Nathan Jurgenson – The Social Photo

The rise of new media platforms that let the vast majority of people interact with each other in a new format has brought a social change that affects the way we “socially” view the world. Jurgenson proposes that to understand this change in interaction, we must look into the history of social media and its relationship with social photography. He details the history of photography itself and how the definition of photography has become a subject of debate because accessible, popular social media apps like Instagram have redefined the act of documentation. Now, people all over the world can photograph and document every aspect of their lives, a drastic change from the past, when photography was largely limited to “professionals.” Jurgenson also discusses the urge to create an illusion of decay and age through Instagram filters, which highlights people’s desire for authenticity.

To what extent can something posted on Instagram truly be considered “photography” by expert standards? What would those standards require? Can ANYONE be a photographer?

Jill Walker Rettberg – What can’t we measure in a quantified world?

This talk highlights the ongoing trend of quantification in our society today. Almost anything you can imagine can be turned into numbers, which in turn can influence the way we live. One example Rettberg uses is her Fitbit, which displays the number of steps she takes each day: when she noticed she wasn’t reaching the recommended number of steps, she actively changed her routine to move more, which raised her step count. This is just one example of how numbers can prompt us to change our lives. She brings up multiple examples of data-quantifying devices, existing or still in development, that are meant to make our lives more convenient.

Do you think such devices will become so integrated into our daily lives that worrying about our “life stats” every minute will be the new normal?

Ben Grosser – What Do Metrics Want? How Quantification Prescribes Social Interaction on Facebook

Numbers and metrics have become an important part of our lives because social media platforms like Facebook quantify our interactions as likes, shares, and comments. In this article, Grosser details the persistent desire for more among Facebook users, a desire tied to the capitalist tendencies of the society they live in. More likes and shares mean more self-worth; exchange value equals personal worth. He then describes the browser extension he created to counter this and expose our dependence on the numbers: the Facebook Demetricator.

Do you think society will ever move past its desire for more and its dependence on the metrics that pervade social media?

Wendy Chun – Programmed Visions

Software is a part of new media that opens up many doors to explore the complexity of the world. There is merit to understanding the intricacies of this medium; as the article puts it, understanding software is in some way a form of enlightenment. The interplay between visibility and invisibility within the medium makes it a powerful force in the new media age. The question of how it truly works remains, since much of it is still unknown to us, and that is something to explore and discover within this new and growing technology.

My question is: is this something to be explored on an individual basis? What tools should we acquire beforehand to gain a deeper understanding of how software works?

Matthew Fuller – How to Be a Geek

This article does the opposite of gatekeeping: it suggests that the culture and community surrounding software and the technical side of the world are not limited to experts and the ‘geeks’ who share an ever-growing interest in software. Although ‘geeks’ are seen as the face of internet obsession, in reality everyone is free to explore software, regardless of expertise. We are all allowed to ‘geek’ out. The article also discusses the relationship between technology and humans, arguing that contemporary technology is “not an extension of man” but rather a complex technical medium that helps us understand the world better.

My questions are: Is there a way to present knowledge of software and technology in a more accessible, less intimidating way so that it reaches a wider range of people? Should everyone be taught more about using software in school and everyday life? What can we do to remove the stigma of being a ‘geek’ so that people are less apprehensive about technological exploration?

Geert Lovink – Sad by Design

This article discusses how little control we actually have over the extremely complex technology in our pockets, and how our experience of it has been monopolized and capitalized on to benefit the provider rather than the user. Major corporations have used behavioral analysis to develop addictive technology, successfully capturing users who now feel compelled to use their apps. Young people especially are so engrossed in their phones that they feel bound to check them constantly, because these capitalist organizations have figured out how best to pull us deep into the rabbit hole of technological obsession. They have also zeroed in on inciting emotions (sadness in particular, commonly extracted from female users) to keep us drawn in.

What can we do to raise other users’ awareness of this capitalist scheme so that we can collectively learn to disengage from technology?

Soren Pold – New ways of hiding: Towards meta interface realism

Within the complexities of the World Wide Web lies the question of who actually controls seeing and being seen. In this article, Pold goes over the different ways the meta interface collects data from everyday users while camouflaged, hidden from everyone unless someone actively looks for it. This interfacing is both voluntary (meaning it is disclosed in the terms and conditions the user agrees to) and involuntary (background processes that track and store user activity and information without users even realizing it). The article proposes art as a way to explore and expose how this happens, and presents several artists who have successfully revealed the myriad ways the meta interface tracks us in the dark.

What can regular users do to become more aware of this and to protect their own information as best they can?