Cathy O’Neil – The era of blind faith in big data must end (TED Talk, 13m)

Cathy talks about data laundering, a process in which technologists hide ugly truths inside black box algorithms and call them objective. We call these algorithms “meritocratic” when they are in fact non-transparent, and have the importance and power to wreak destruction. If we think of the worst possible outcomes, they are in fact weapons of mass destruction. Except this is not “revolution, but evolution”. The technology that carries out this data laundering is not inventing anything new: it is codifying biases that were present in our society long before contemporary code was able to solidify and exacerbate them.

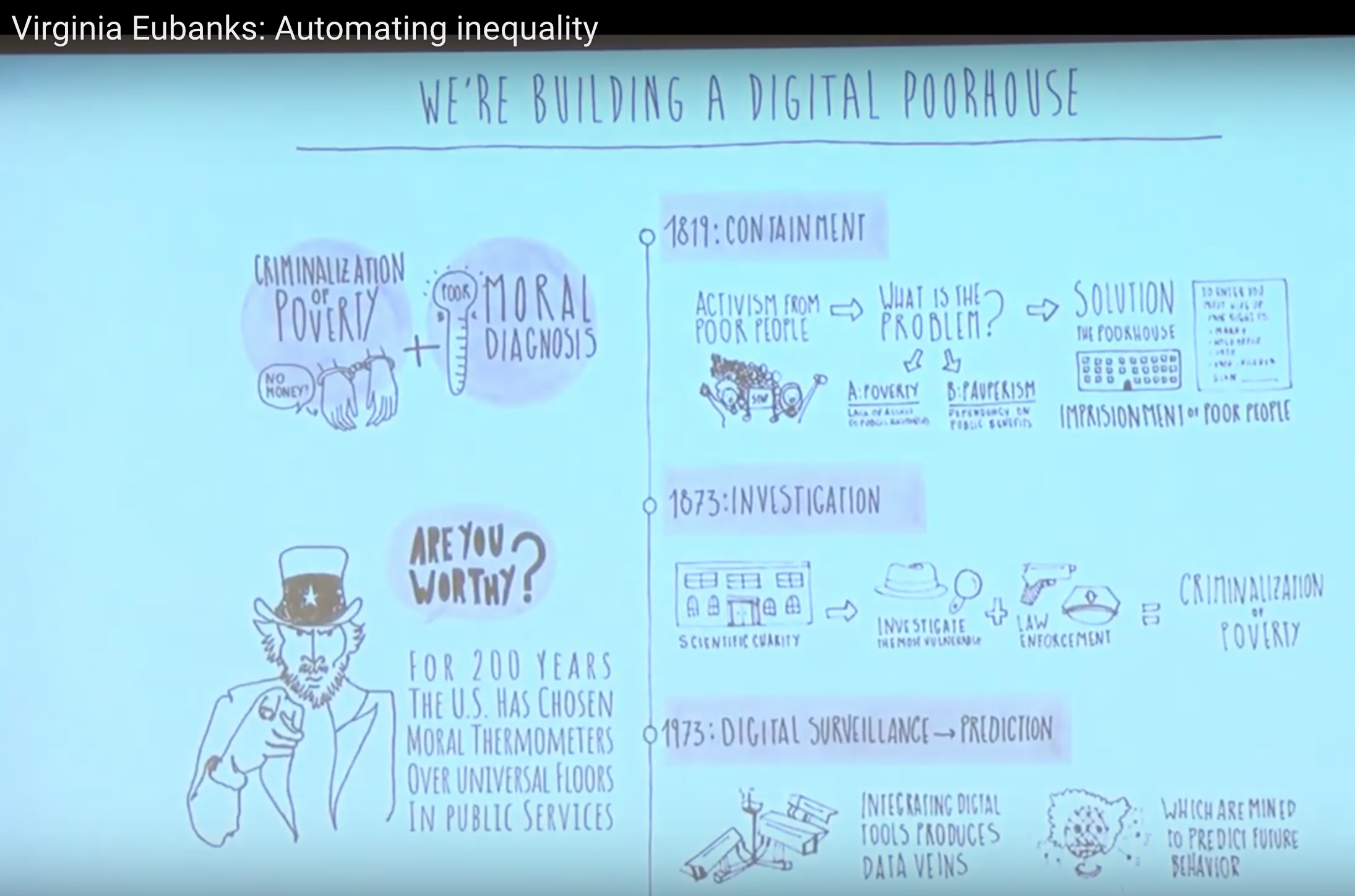

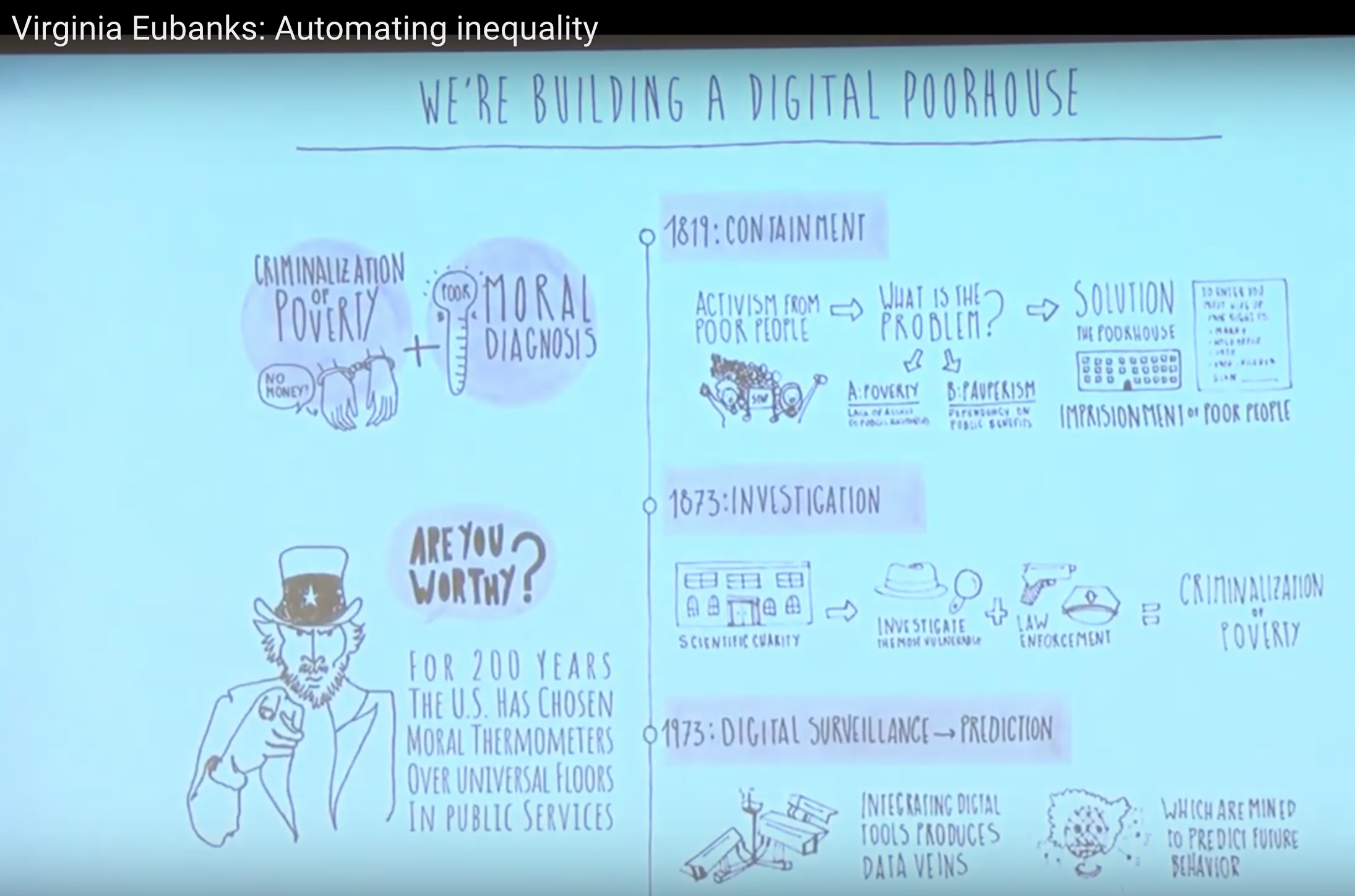

Virginia Eubanks – Automating Inequality (talk, 45m)

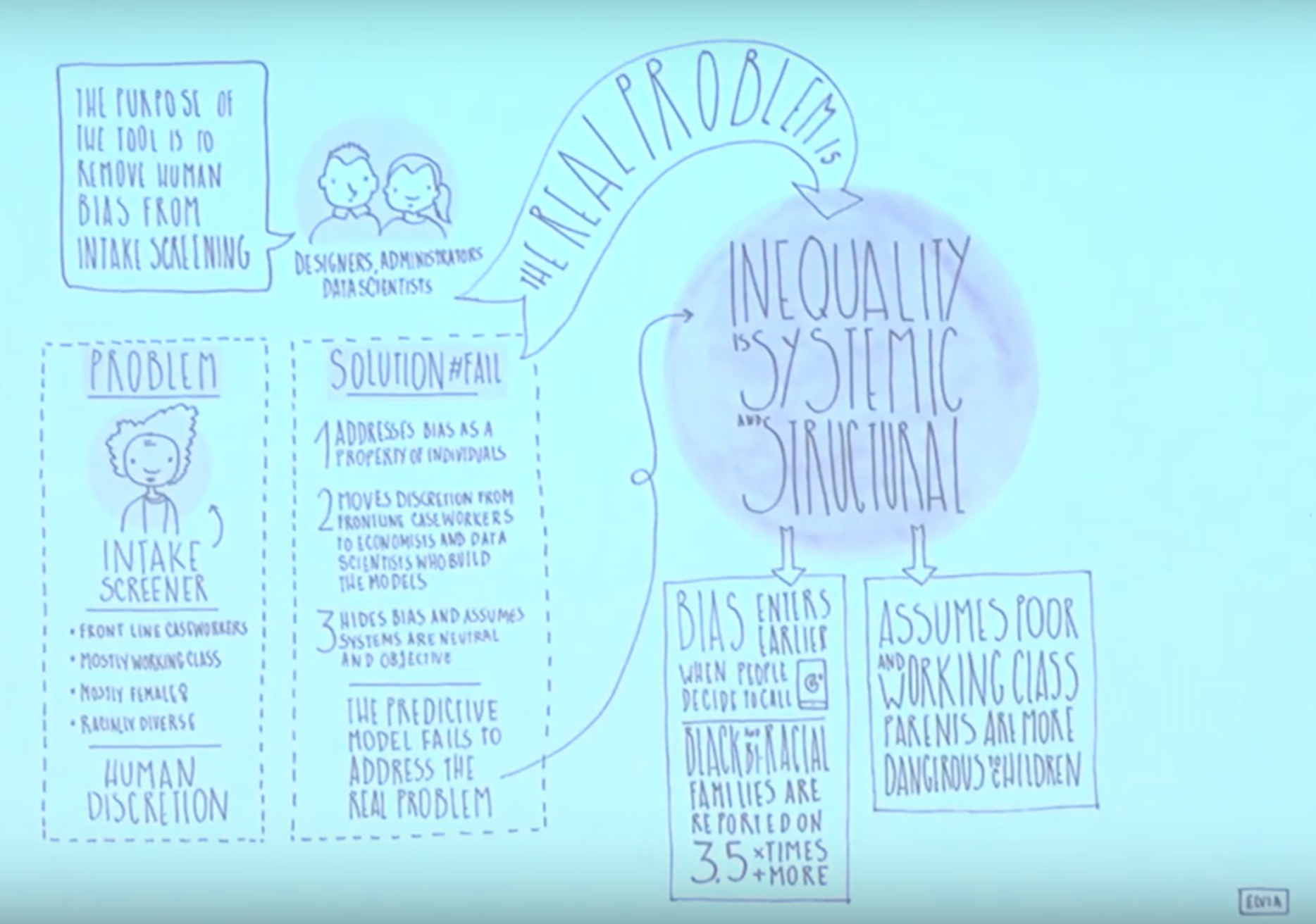

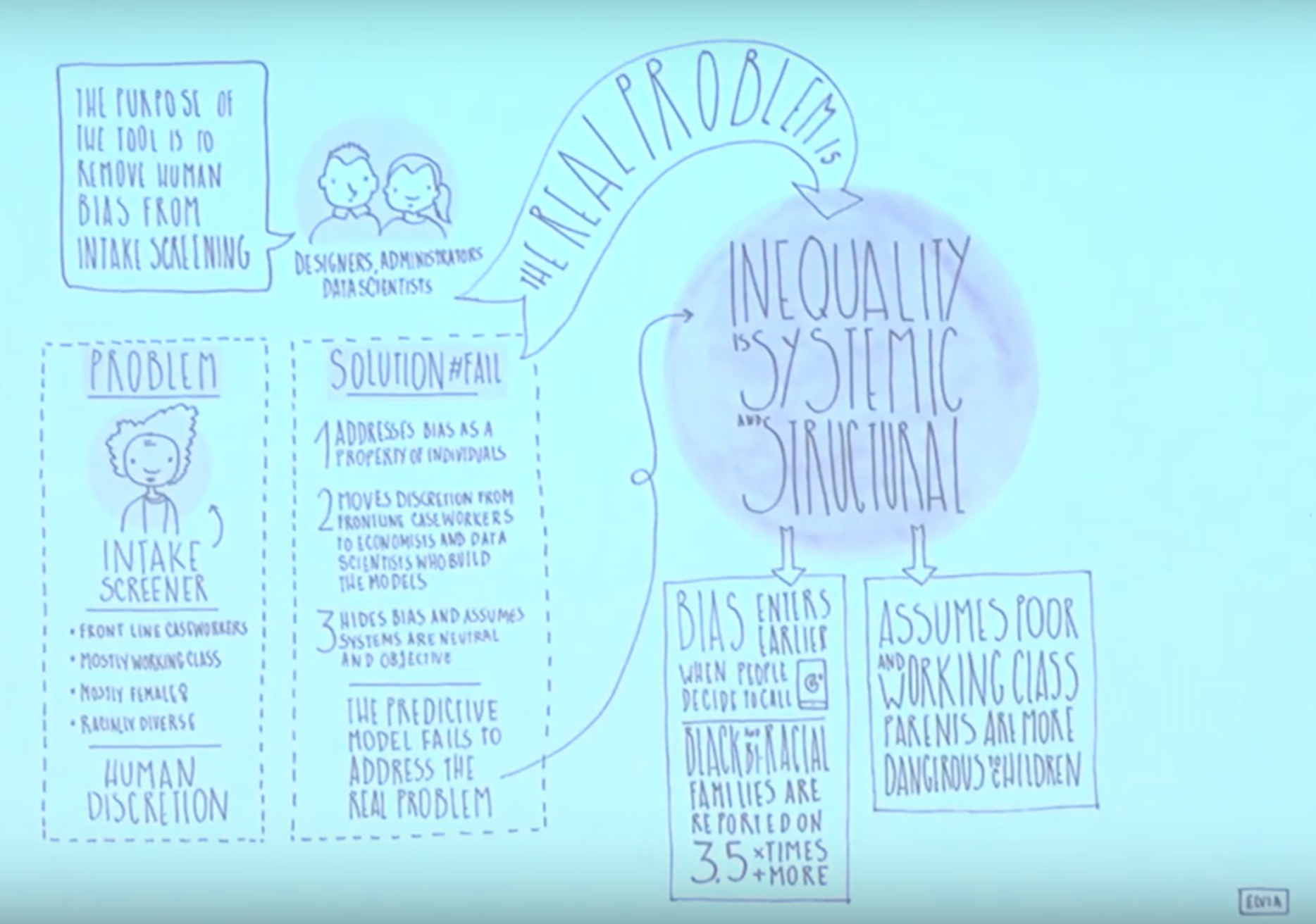

Virginia Eubanks talks about Automating Inequality as the creation of an institution that upholds this “idea of austerity in which there are seemingly not enough resources for everybody, and that we have to make really hard choices about who deserves to attain those basic rights to get those resources”. She talks about the feedback loops of inequality that live in data warehouses and assume this idea of austerity, therefore looping it back into the system. Something very important she talks about is how these systems create false positives and false negatives, which are the dangerous and debilitating parts of how this data can be used against some individuals in favor of others.

Frank Pasquale – Black Box Society – chapter 1 (pp 1-11)

This chapter is about big data and the multiple hidden layers of “firewalls” you have to get through. If you were to start calling on firms to be more transparent, for example, you would still have to get through all that incredibly hard-to-understand contract jargon, which might be discouragement enough not to press the subject further: “However, transparency may simply provoke complexity that is as effective at defeating understanding as real or legal secrecy.”

“Transparency is not just an end in itself, but an interim step on the road to intelligibility.”

Janet Vertesi – My Experiment Opting Out of Big Data… (Time, short article)

This experiment follows a woman who used Tor, a tool that routes her searches through random servers. She did this because she wanted to evade the millions of data bots that would otherwise have invaded her online and in-person existence through data referrals and advertisements. She bought everything in cash, used gift cards to purchase things on Amazon, and ended up having to act like a criminal, since Tor is known for illicit activity.

This may seem insignificant (so what if companies send me coupons for baby stuff?), but this same kind of tracking, “paved with the heartwarming rhetoric of openness, sharing and connectivity,” in her words “actually undermines civic values and circumvents checks and balances”.

Walliams and Lucas – The Computer Says No (comedy skit, 2m)

I feel like this is something my parents would complain about, especially in hospital or administrative settings where unnecessary bureaucracy takes place and everything is handled through paperwork or computers, passed off by real hands, but only as a middleman. Having a middle-person there for all these bureaucratic processes helps ease the thought that these centers of “help” and importance (hospitals, DMVs, tax centers, banks, etc.) are just databases that act as black boxes, storing our data whether we give it willingly or unwillingly.

NOTES:

Cathy O’Neil – The era of blind faith in big data must end (TED Talk, 13m)

Separates winners from losers

Or a good credit card offer

You choose the success of something

Algorithms are opinions embedded in code

Reflect our past patterns

Automate the status quo

That would work if we had a perfect world

But we don’t, we are reinforcing what we put in, our own collective memories and biases

They could be codifying sexism

Or any kind of bigotry

DATA LAUNDERING

It’s a process by which:

- Technologists hide ugly truths

- Inside black box algorithms

- And call them objective

Call them meritocratic

When they are secret, important, and destructive

Weapons of mass destruction

These are private companies

Building private algorithms

For private ends

Even the ones I talked about with police and teachers

Those are built by private institutions

And sold to the government

This is called: Secret Sauce

PRIVATE POWER

They are profiting from wielding the authority of the inscrutable (impossible to understand or interpret)

Now you might think

Since all this stuff is private

Maybe the free market will solve this

There is a lot of money in unfairness

Also, we’re not rational economic agents

We’re all racist and bigoted in ways that we don’t understand or know

We can check them for fairness

Algorithms can be interrogated

And they will tell us the truth every time

This is an algorithmic audit

1. Data integrity check

2. Definition of success; audit that

Who does this model fail?

What is the cost of that failure?

We need to think of the long-term effects of algorithms and the feedback loops they set in motion.
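To make the audit questions above concrete for myself, here is a minimal sketch of asking "who does this model fail?" by comparing error rates across groups. The `audit_by_group` function, the group labels, and the toy records are all made up for illustration; this is not O’Neil’s method or any real auditing tool.

```python
from collections import defaultdict


def audit_by_group(records):
    """Return false-positive and false-negative rates for each group.

    Each record is a tuple (group, predicted_risk, actual_harm),
    where the last two values are booleans.
    """
    counts = defaultdict(lambda: {"fp": 0, "fn": 0, "neg": 0, "pos": 0})
    for group, predicted, actual in records:
        c = counts[group]
        if actual:
            c["pos"] += 1
            if not predicted:
                c["fn"] += 1  # harm existed but the model missed it
        else:
            c["neg"] += 1
            if predicted:
                c["fp"] += 1  # model flagged harm where none existed
    report = {}
    for group, c in counts.items():
        report[group] = {
            "false_positive_rate": c["fp"] / c["neg"] if c["neg"] else None,
            "false_negative_rate": c["fn"] / c["pos"] if c["pos"] else None,
        }
    return report


# Hypothetical toy data: (group, model said "risky", harm actually occurred)
records = [
    ("A", True, False), ("A", True, True), ("A", False, False), ("A", True, False),
    ("B", False, True), ("B", False, False), ("B", False, False), ("B", True, True),
]
report = audit_by_group(records)
for group, rates in report.items():
    print(group, rates)
```

In this toy data the model over-flags group A and misses harm in group B, which is the kind of uneven failure the audit is supposed to surface. The cost of each failure still has to be judged by people, not computed.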

Data scientists

We should not be the arbiters of truth

We should be interpreters of the voices…

__ VIRGINIA EUBANKS Automating Inequality

Virginia Eubanks – Automating Inequality (talk, 45m)

We are creating an institution

This idea of austerity

That there is not enough for everybody

And that we have to make really hard decisions/choices about who deserves to attain their basic human rights

Tools of AUTOMATING INEQUALITY

More evolution than revolution

Their historical roots go back all the way to the 1820s

- Digital poorhouse assumes austerity (by assuming it, it recreates it)

- DATAWAREHOUSES- private healthcare stores that information secretly, public health databases may not / do not

- If you can afford to pay your babysitter out of pocket, then that information about your family will not end up in the Data Warehouse

False positive problems

seeing risk of harm where no harm actually exists

the system confusing parenting while poor with poor parenting

creating a system of poverty profiling: the county spent so much time investigating and risk-rating families in their communities that it created a feedback loop of injustice

that began with

-families getting more data collected about them because they were interacting with county systems

-having more interactions meant their score was higher

-having their score higher meant they were investigated more often

-and because they were investigated more often, more data was collected on them, and so on: the loop continues and data collection grows

feedback loop- same as predictive policing
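The loop described above can be sketched as a tiny simulation. This is a toy model under loud assumptions: the score is simply the number of records on file, and the threshold and the records added per investigation are invented numbers. It is not the county’s actual risk model.

```python
def simulate_feedback_loop(initial_records, rounds, threshold=3,
                           records_per_investigation=2):
    """Toy model of the loop: data on file -> score -> investigation -> more data."""
    records = dict(initial_records)
    history = [dict(records)]  # snapshot before any round runs
    for _ in range(rounds):
        for family, count in list(records.items()):
            score = count  # assumption: the score is just the number of records on file
            if score >= threshold:
                # an investigation happens, and it adds new records to the file
                records[family] = count + records_per_investigation
        history.append(dict(records))
    return history


# Hypothetical families: one interacts with county systems, one pays privately
history = simulate_feedback_loop(
    {"uses_county_services": 4, "pays_out_of_pocket": 1}, rounds=3
)
for step, snapshot in enumerate(history):
    print(step, snapshot)
```

The family that already had data on file keeps getting investigated and its file keeps growing, while the family that stays out of the data warehouse never crosses the threshold, no matter what is actually happening at home.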

FALSE NEGATIVES

Not seeing issues where issues might actually exist

Because there is barely any data on abuse in upper- and middle-class families in the data warehouse, the kinds of behaviors that might lead to abuse or neglect in those families can be ignored or misinterpreted by this algorithm because it’s not logged

Geographically isolated places, or suburbs (the system misses key opportunities for outreach to places like these)

Discrimination enters the process most at the point when the COMMUNITY calls in to report cases to the Child Welfare phone responders

-When call screeners receive these, there is also a bit of disproportion that happens in that moment

Referral is embedded in our cultural understandings of what a safe and healthy family looks like, and in the United States, that family looks white, heterosexual, and rich

-Removing discretion from those frontline workers could remove a stop to the massive amount of discrimination that’s entering earlier in the process, and could potentially worsen inequality in the system rather than making it better

Discretion is like energy

Never created or destroyed, it’s just moved

Who are we taking discretion away from, who are we giving it to?

-Removing discretion from frontline child welfare workers, who are a diverse, largely female workforce

Giving it to the economists, computer engineers, and social scientists who are building the models

These tools, at their worst, can serve as an ~empathy override~

They allow us to outsource to computers some of the most difficult problems and decisions we face as a society

Coordinated Entry System (used around the country, around the world)

Responds to the county’s extraordinary housing crisis

-works by assigning each unhoused person who they have managed to survey a number/score that falls on a spectrum of vulnerability

VI-SPDAT

Vulnerability Index - Service Prioritization Decision Assistance Tool

-Serves those at the top of the scale, the chronically homeless

-Serves those at the bottom of the scale, the newly homeless who need just a little help to get back on their feet

Those in the middle are labeled as: not vulnerable enough to merit immediate assistance, BUT

Not STABLE enough to be served by the time-limited resources of rapid RE-housing
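A rough sketch of how a score-based match like this leaves a gap in the middle. The `triage` function and both cutoff values are placeholders I made up to illustrate the notes above; they are not the tool’s real thresholds or matching rules.

```python
def triage(score, rapid_rehousing_max=3, supportive_housing_min=8):
    """Map a vulnerability score to a resource, using hypothetical cutoffs."""
    if score >= supportive_housing_min:
        # top of the scale: treated as vulnerable enough for immediate assistance
        return "permanent supportive housing"
    if score <= rapid_rehousing_max:
        # bottom of the scale: treated as stable enough for time-limited help
        return "rapid re-housing"
    # everyone in between: not vulnerable enough, but not stable enough either
    return "no match"


for score in (2, 5, 10):
    print(score, triage(score))
```

Anyone whose score lands between the two cutoffs gets nothing from either program, even though they handed over the same data as everyone else.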

Leaves people feeling as if they are included in a system that asks them to incriminate themselves in return for a higher lottery number in the system

-Give folks your data and HOPE you get matched with a better housing opportunity

-or close yourselves out of most housing resources in the community at all

Data from the organization’s survey is shared with 161 organizations, and because of federal law and databases (the Homeless Management Information System), one of those orgs is the LAPD

___BLACK BOX SOCIETY

There is even an emerging field of “agnotology” that studies the “structural production of ignorance, its diverse causes and conformations, whether brought about by neglect, forgetfulness, myopia, extinction, secrecy, or suppression.”

But what if the “knowledge problem” is not an intrinsic aspect of the market, but rather is deliberately encouraged by certain businesses? What if financiers keep their doings opaque on purpose, precisely to avoid or to confound regulation? That would imply something very different about the merits of deregulation. The challenge of the “knowledge problem” is just one example of a general truth: What we do and don’t know about the social (as opposed to the natural) world is not inherent in its nature, but is itself a function of social constructs. Much of what we can find out about companies, governments, or even one another, is governed by law.

Laws of privacy, trade secrecy, the so-called Freedom of Information Act, all set limits to inquiry. They rule certain investigations out of the question before they can even begin. We need to ask: To whose benefit?

Some of these laws are crucial to a decent society. No one wants to live in a world where the boss can tape our bathroom breaks. But the laws of information protect much more than personal privacy. They allow pharmaceutical firms to hide the dangers of a new drug behind veils of trade secrecy and banks to obscure tax liabilities behind shell corporations. And they are much too valuable to their beneficiaries to be relinquished readily.

Even our political and legal systems, the spaces of our common life that are supposed to be the most open and transparent, are being colonized by the logic of secrecy. The executive branch has been lobbying ever more forcefully for the right to enact and enforce “secret law” in its pursuit of the “war on terror,” and voters contend in an electoral arena flooded with “dark money”: dollars whose donors, and whose influence, will be disclosed only after the election, if at all.

But while powerful businesses, financial institutions, and government agencies hide their actions behind nondisclosure agreements, “proprietary methods,” and gag rules, our own lives are increasingly open books. Everything we do online is recorded; the only questions left are to whom the data will be available, and for how long.

Knowledge is power. To scrutinize others while avoiding scrutiny oneself is one of the most important forms of power. Firms seek out intimate details of potential customers’ and employees’ lives, but give regulators as little information as they possibly can about their own statistics and procedures.

Sometimes secrecy is warranted. We don’t want terrorists to be able to evade detection because they know exactly what Homeland Security agents are looking out for. But when every move we make is subject to inspection by entities whose procedures and personnel are exempt from even remotely similar treatment, the promise of democracy and free markets rings hollow. Secrecy is approaching critical mass, and we are in the dark about crucial decisions. Greater openness is imperative. (No transparency!)

Financial institutions exert direct power over us, deciding the terms of credit and debt. Yet they too shroud key deals in impenetrable layers of complexity. In 2008, when secret goings-on in the money world provoked a crisis of trust that brought the banking system to the brink of collapse, the Federal Reserve intervened to stabilize things, and kept key terms of those interventions secret as well. Journalists didn’t uncover the massive scope of its interventions until late 2011. That was well after landmark financial reform legislation had been debated and passed (without informed input from the electorate) and then watered down by the same corporate titans whom the Fed had just had to bail out.

Deconstructing the black boxes of Big Data isn’t easy. Even if they were willing to expose their methods to the public, the modern Internet and banking sectors pose tough challenges to our understanding of those methods. The conclusions they come to (about the productivity of employees, or the relevance of websites, or the attractiveness of investments) are determined by complex formulas devised by legions of engineers and guarded by a phalanx of lawyers.

So why does this all matter? It matters because authority is increasingly expressed algorithmically. Decisions that used to be based on human reflection are now made automatically.

Software encodes thousands of rules and instructions computed in a fraction of a second. Such automated processes have long guided our planes, run the physical backbone of the Internet, and interpreted our GPSes. In short, they improve the quality of our daily lives in ways both noticeable and not.

The same goes for status updates on Facebook, trending topics on Twitter, and even network management practices at telephone and cable companies. All these are protected by laws of secrecy and technologies of obfuscation.

Though this book is primarily about the private sector, I have called it The Black Box Society (rather than The Black Box Economy) because the distinction between state and market is fading.

CAPITALISM IS TURNING INTO GOVERNMENT PROXIES

We are increasingly ruled by what former political insider Jeff Connaughton called “The Blob,” a shadowy network of actors who mobilize money and media for private gain, whether acting officially on behalf of business or of government. In one policy area (or industry) after another, these insiders decide the distribution of society’s benefits (like low-interest credit or secure employment) and burdens (like audits, wiretaps, and precarity).

But a market-state increasingly dedicated to the advantages of speed and stealth crowds out even the most basic efforts to make these choices fairer.

Obfuscation involves deliberate attempts at concealment when secrecy has been compromised. For example, a firm might respond to a request for information by delivering 30 million pages of documents, forcing its investigator to waste time looking for a needle in a haystack. And the end result of both types of secrecy, and obfuscation, is opacity, my blanket term for remediable incomprehensibility.

However, transparency may simply provoke complexity that is as effective at defeating understanding as real or legal secrecy.